From Load to Prediction

Is it time to change our underlying model of Human Cognitive Architecture? I ask the difficult question - is it time to shift Cognitive Load Theory's fundamental theoretical base?

This post is intended as a part of an ongoing scientific conversation, responding directly to Paul Kirschner’s reflection on John Sweller’s recent paper (Sweller 2023), revisiting Cognitive Load Theory (CLT). With Kirschner, I highly value Sweller's work and respect his willingness to refine his theory in response to new findings. However, I believe it’s time to ask a more challenging question: Are we stretching a model beyond its limits to keep it viable, when Predictive Processing/Active Inference theory might already offer a simpler and more coherent support for instructional theory and practice?

Cognitive Load Theory and Its Limits

Cognitive Load Theory (CLT) proposes a model of learning centred around the concept of cognitive load, grounded in a specific view of human cognitive architecture. This information processing model, based on decades of research in educational psychology, assumes that working memory is severely limited in both capacity and duration, while long-term memory is functionally unlimited. External stimuli prompt transfers from long-term memory to working memory, and the subsequent transfers and updates back to long-term memory constitute the learning process.

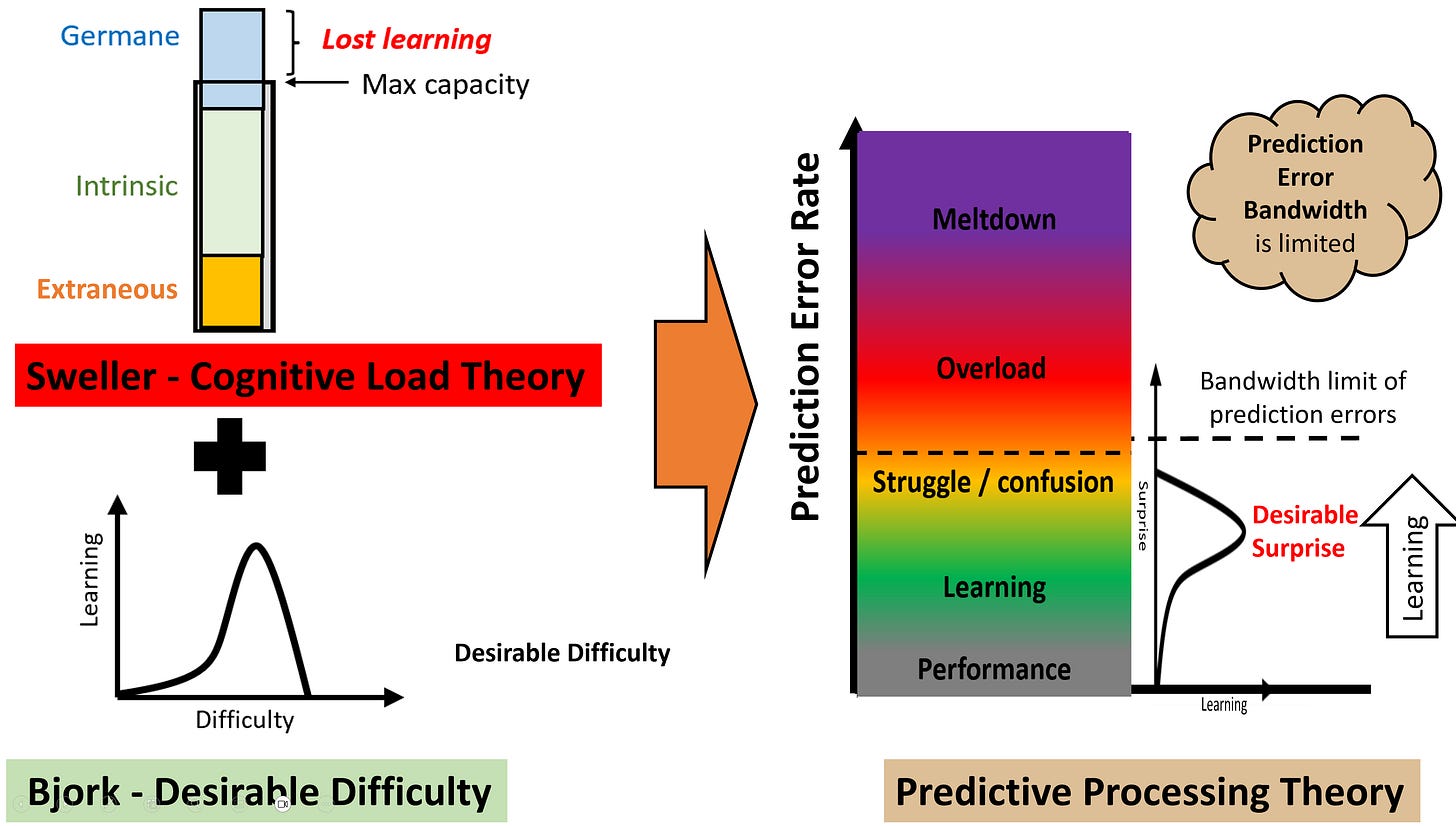

According to CLT, learning is most effective when instructional design takes into account the amount and type of load imposed on working memory. To aid in this, cognitive load is typically divided into three categories: intrinsic load (inherent to the complexity of the material), extraneous load (caused by poor instructional design), and germane load (resources devoted to schema construction and automation). This framework has helped explain and predict a range of instructional phenomena, including the worked example effect, split attention effect, redundancy effect, expertise reversal effect, modality effect, and others. From these, CLT has generated a set of instructional strategies aimed at improving learning outcomes by minimising extraneous load and managing intrinsic load, so that learners can effectively process the demands of germane load. The core heuristic underpinning CLT is clear: because our capacity to handle cognitive load is small, instructional materials should be carefully designed to avoid overwhelming learners, ensuring that their limited cognitive resources are used to support learning rather than manage unnecessary complexity.

In his recent excellent blog When Failure Feeds the Flame, Kirschner reviews Sweller’s recent paper The Development of Cognitive Load Theory: Replication Crises and Incorporation of Other Theories Can Lead to Theory Expansion (Sweller 2023). He notes that Sweller's work models excellent scientific practice: where empirical testing reveals conceptual replication failures, these can prompt revision and development of the theory, moving it forward. For example, inconsistencies in experimental data led to CLT being modified to include the expertise reversal effect and to integrate the distinction between biologically primary and secondary knowledge, amongst others.

This post follows that same spirit of reflection. I ask, “At what point should scientists, faced with ongoing conceptual replication failures, decide to rework or replace their underlying explanatory framework?”

In his 2023 paper, Sweller reiterates CLT’s foundation, which he refers to as the human cognition framework: the brain as an information processing system. It transfers information from long-term memory into a limited-capacity working memory to process external input, and then transfers new information back to long-term memory. The various learning effects CLT describes, such as worked examples and redundancy, derive from this architecture.

However, empirical findings often revealed mismatches. For example, prior knowledge strongly affects working memory limits and load, suggesting that the theory’s core model doesn’t fully explain learning differences. Although CLT incorporated many effects, these refinements did not change the underlying system architecture. It has acknowledged other factors at play but hasn’t explained how they integrate into the core human cognitive architecture model.

From Information Processor to Prediction Machine

Meanwhile, advances across neuroscience, psychology, and philosophy in the last three decades have transformed our understanding of the human cognition framework. An increasingly dominant view is that human cognitive architecture follows a hierarchical Bayesian generative model: a framework in which the brain minimises prediction error through descending predictions and ascending precision-weighted prediction errors. This is a significant shift from a view of the brain as a passive information processor to one of the brain-body system as a prediction machine.

The closely related Predictive Processing and Active Inference theories capture this new paradigm and are becoming the dominant and unifying framework across many neuroscience disciplines, with the notable exception of education.

I propose that it is time to replace the aged information-processing model of human cognition with a theory built around Predictive Processing / Active Inference theory.

What Is Predictive Processing/Active Inference Theory?

In Predictive Processing/Active Inference, the brain constantly builds and refines a hierarchical generative model, a probabilistic structure designed to minimise prediction error across all sensory modalities. This model generates predictions about hidden causes of incoming sensory data and selects among potential policies (sequences of actions) to minimise future surprise (i.e., minimise future prediction errors). This has proved a successful evolved adaptation for ensuring survival (Friston, 2010). I think it is helpful to think of our brain (and body system) as a Prediction Machine. This machine is constructed from a network of neurons linked to our senses and our effector organs. It doesn’t sense, process, store and retrieve; it just predicts and corrects. Learning, on this view, is a re-engineering or reconfiguring of the machinery to predict better in the future.

Consider the image above of shadows on a curtain as a metaphor for how we sense the world. All we actually know is areas of light and dark; our brains infer a “hidden state” to explain and predict what will make that pattern. We create a hidden state that we label a “cat”, and another that we might label “window bars”. We infer properties of cats and window bars from our repeated experiences. Based on what has just been happening, we use our rich stored generative model to predict what our sensory input should look like next. The patterns associated with the cat may shift across the scene. Those associated with the bars will stay static. If what we predict doesn’t quite match, we adjust our predictions to align with our sensory input.
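As a rough illustration of “predict, compare, adjust”, here is a minimal Python sketch of a single belief update, assuming simple Gaussian beliefs. The function name and the numbers are illustrative assumptions, not taken from the papers cited in this post. The prediction error is weighted by how much the incoming signal is trusted (its precision) relative to the existing belief.

```python
def precision_weighted_update(prior_mean, prior_precision,
                              observation, sensory_precision):
    """One belief update driven by a precision-weighted prediction error.

    Assumes Gaussian beliefs; precision is the inverse of variance.
    """
    # How far the sensory input is from what was predicted.
    prediction_error = observation - prior_mean
    # The more the senses are trusted relative to the prior,
    # the more of the error is allowed to change the belief.
    gain = sensory_precision / (prior_precision + sensory_precision)
    posterior_mean = prior_mean + gain * prediction_error
    posterior_precision = prior_precision + sensory_precision
    return posterior_mean, posterior_precision


# Equal trust in prior and senses: the belief moves halfway to the input.
print(precision_weighted_update(0.0, 1.0, 2.0, 1.0))  # (1.0, 2.0)

# Highly trusted senses: the belief moves most of the way to the input.
print(precision_weighted_update(0.0, 1.0, 2.0, 9.0))
```

In this toy version, a confident prior (high prior precision) barely shifts when a noisy input disagrees with it, while a weak prior is quickly overwritten.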

What we call schemas, mental models, episodic memories, and skills are not separate representational systems. They are just different levels or functional aspects of the same underlying generative model, parts of the prediction machine.

This is a fundamental paradigm shift in how we view our minds/brain body systems.

For a more detailed explanation, see my Substack post, “Teachers Are Prediction Error Managers”. For accessible talks, explore Anil Seth’s “Is Reality a Controlled Hallucination?”, Andy Clark’s “How the Brain Shapes Reality,” and Karl Friston’s “Active Inference in the Brain.”

The Problem with Patchwork

Over time, CLT has had to add new constructs to explain anomalies — element interactivity, expertise reversal, desirable difficulty, and more. But this growing complexity begins to look like patchwork. We can reframe these issues more clearly through Predictive Processing/Active Inference.

Limited Working Memory vs. Limited Prediction Error Bandwidth

CLT holds that working memory is limited and can be overloaded. Load is divided into intrinsic, extraneous, and sometimes germane types.

Predictive Processing/Active Inference offers a different view. The brain predicts sensory input using its generative model. When predictions match, no update is needed. When they don’t, prediction errors signal the need for adjustment. Small errors lead to parameter updates; large, persistent errors trigger model updates. This is learning.
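The distinction between small errors and large, persistent errors can be sketched in a few lines of Python. This is a toy model under stated assumptions: the function name, the threshold `large_error`, the `patience` count, and the rule "adopt the latest observation" for a model update are all hypothetical choices made for illustration, not a mechanism claimed by the cited literature.

```python
def run_learner(observations, learning_rate=0.1, large_error=2.0, patience=3):
    """Track a single quantity, nudging or replacing the estimate.

    Small errors nudge the estimate (parameter update). A run of
    `patience` consecutive large errors replaces it (model update).
    All thresholds here are illustrative assumptions.
    """
    estimate = observations[0]
    streak = 0  # consecutive large prediction errors seen so far
    log = []
    for obs in observations[1:]:
        error = obs - estimate
        streak = streak + 1 if abs(error) >= large_error else 0
        if streak >= patience:
            # Persistent large error: abandon the old hypothesis.
            estimate = obs
            streak = 0
            log.append("model_update")
        else:
            # Ordinary error: adjust the existing model slightly.
            estimate += learning_rate * error
            log.append("parameter_update")
    return estimate, log


# The world changes abruptly from about 0 to about 5.
final_estimate, log = run_learner([0.0, 0.1, -0.1, 5.0, 5.0, 5.0, 5.1])
print(log)
```

With this input, the learner makes small adjustments at first, tolerates two large errors, and only restructures on the third consecutive one.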

Neurobiological studies (Vezoli et al., 2021; George et al., 2025) show that fewer neurons carry error signals upwards than carry predictions downwards, creating a bandwidth limit for prediction errors. Too many unresolved errors overwhelm the system, leading to confusion and dysregulation.

In short, learning is constrained not by working memory load but by the rate of manageable prediction errors (Friston et al., 2017). Prior knowledge makes environments more predictable, reducing errors. CLT observed that working memory limits depended heavily on prior knowledge; Predictive Processing/Active Inference explains why.

On Extraneous Load

CLT defines extraneous load as cognitive effort unrelated to the task. Predictive Processing/Active Inference sees extraneous prediction errors arising from unpredictable stimuli in the environment. If a distraction is predictable — like a steady ticking clock — it generates little error. But unexpected changes increase error rates.

This explains why children tolerate busy classroom walls when displays remain constant and predictable, but get distracted by sudden changes.
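A small Python sketch can make the ticking-clock point concrete. It assumes a deliberately simple predictor that expects the signal to repeat with a fixed period; the function name and the two example signals are hypothetical and chosen only for illustration.

```python
def mean_prediction_error(signal, period):
    """Average error of a predictor that expects the signal to repeat.

    The prediction for time t is simply the value seen one period earlier.
    """
    errors = [abs(signal[t] - signal[t - period])
              for t in range(period, len(signal))]
    return sum(errors) / len(errors)


# A steady tick every fourth time step (1 = tick, 0 = silence).
steady_tick = [1, 0, 0, 0] * 5

# The same number of time steps, but with events at irregular moments.
sudden_changes = [1, 0, 0, 0, 1, 0, 0, 0, 0, 1,
                  1, 0, 1, 0, 0, 1, 0, 0, 0, 1]

print(mean_prediction_error(steady_tick, period=4))     # 0.0
print(mean_prediction_error(sudden_changes, period=4))  # greater than 0
```

The steady signal is just as "busy" as the irregular one, yet it produces no prediction error once its rhythm is known. It is the irregularity, not the amount of stimulation, that generates error in this toy setup.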

Prediction is not limited to external senses. The brain also tracks interoceptive and proprioceptive signals, as well as social dynamics. These factors contribute to the total prediction error rate, helping to explain the impact of health, sensory sensitivity, and anxiety on learning.

On Learning and Struggle

Kirschner highlights a paradox: increased struggle sometimes leads to better retention, contradicting CLT’s emphasis on reducing load. Predictive Processing/Active Inference resolves this.

Learning happens when persistent prediction errors trigger updates. As tasks become less predictable, more errors arise, requiring greater model adjustment. This feels like struggle. However, too much unpredictability overwhelms the system, halting learning. There is a sweet spot: enough error to drive learning, but not so much that the system shuts down.

This explains why interleaved practice outperforms blocked practice. In blocked practice, predictability is high, so error rates are low, and learning signals are weak. Interleaving introduces uncertainty, prompting more updates.

Similarly, retrieval practice works better than rereading because it generates surprise and prediction error. Variation theory also aligns well, deliberately controlling predictability to direct attention.
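The sweet spot described in this section can be pictured with a toy function. The inverted-U shape, the `capacity` parameter, and the formula itself are assumptions made purely for illustration; they are not an empirical law and are not taken from the cited sources.

```python
def learning_signal(error_rate, capacity=1.0):
    """Toy inverted-U relation between prediction error and learning.

    No error gives nothing to learn from; error at or beyond `capacity`
    overwhelms the system. Both the shape and the numbers are assumptions.
    """
    if error_rate <= 0 or error_rate >= capacity:
        return 0.0
    # Learning grows with error but shrinks as the system nears overload.
    return error_rate * (1 - error_rate / capacity)


for rate in (0.0, 0.1, 0.5, 0.9, 1.2):
    print(rate, round(learning_signal(rate), 3))
```

In this sketch, blocked practice would sit near the low-error end, well-judged interleaving or retrieval practice nearer the middle, and an unscaffolded task far beyond a learner's current model at or past the overload point.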

On the Expertise Reversal Effect

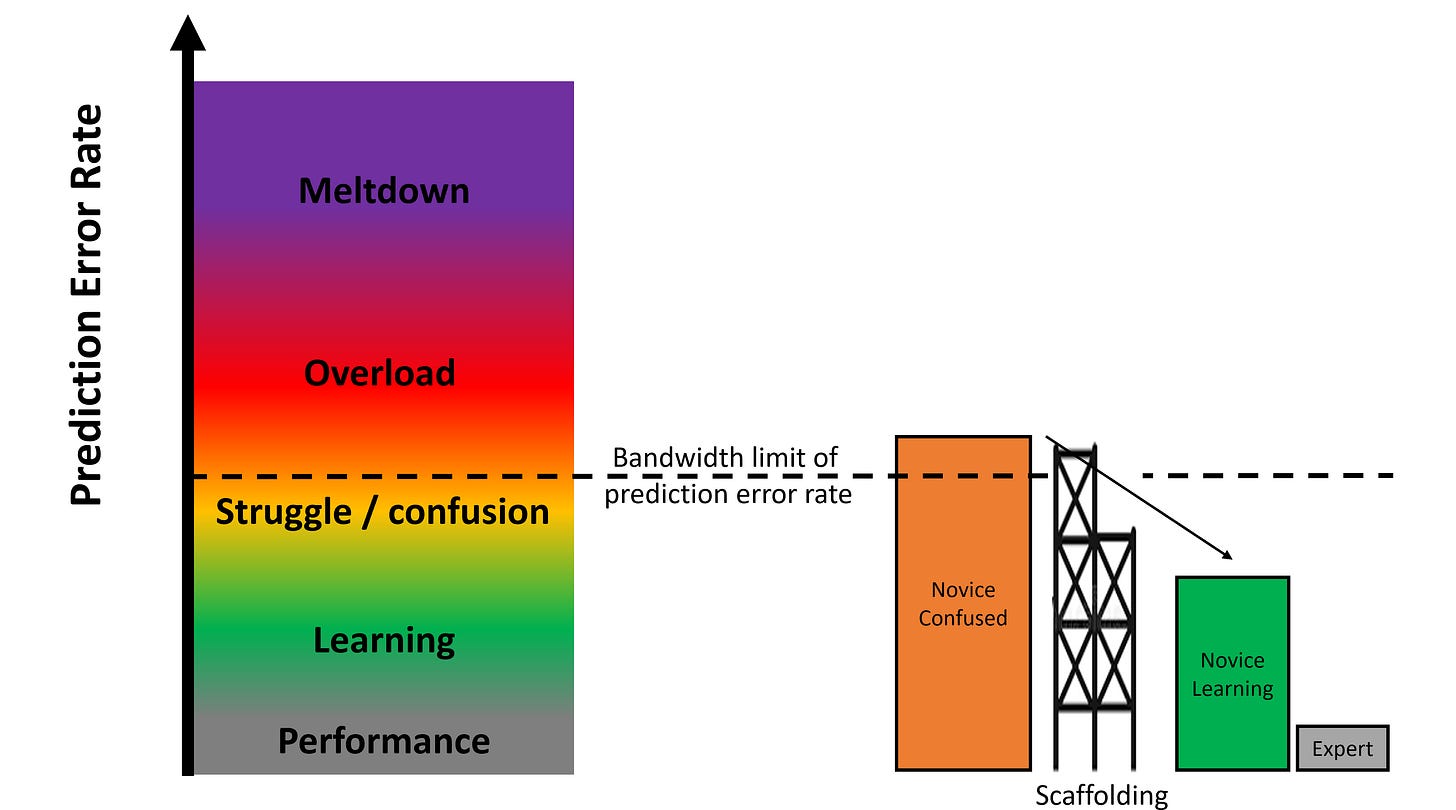

In my blog Expert and Novice Learning: Predictive Processing Insights, I demonstrate how expert and novice learners experience different levels of prediction error on the same task. Expert learners have established generative models that can successfully predict their way through even the most complex tasks in their domain of expertise. We use scaffolding, such as reverse fading, to make enough of the task predictable to bring novice learners’ prediction errors down into the optimum range for learning. We need to take care not to over-scaffold, which would reduce prediction errors to a level that does not trigger model updating (learning). We also need to be aware that learners who are already expert in a particular task will not experience learning from it: they don’t need to, as they already have good generative models.

A Unifying and Integrating Fundamental Theory of Cognition?

In the diagram below, I have tried to illustrate how many teaching strategies can be linked to keeping prediction error rates at the optimum level for learning.

I feel this overriding heuristic of being aware of and managing predictability rather than load is very powerful for navigating our current busy patchwork of related instructional theories and strategies.

On Transference

Predictive Processing/Active Inference does not strongly separate short-term and long-term storage. The brain’s generative model is wired across areas that are more or less plastic. Fast-learning regions like the hippocampus establish temporary connections; durable learning requires transferring model updates to more stable areas during REM sleep and restful wakefulness. In these processes, the relevant long-term sections of the generative model are repeatedly stimulated with Long-Term Potentiation (LTP) signals, which strengthen synapses and grow dendritic spines, or Long-Term Depression (LTD) signals, which weaken synapses and prune dendritic spines back. These processes grow or prune the updates to our generative model.

Because they are biochemical growth processes, involving chemical messaging cascades and motor protein transport back and forth along the long lengths of neurons, they happen on a horticultural timescale rather than a bioelectronic one. This gives a theoretical basis for how learning (long-term change in the generative model) happens. It is consistent with the Ebbinghaus forgetting curve and with the benefits of practice/rehearsal, spacing, retrieval practice, and sleep consolidation, and I believe it would provide explanatory support for the apparent recovery in “working memory” after wakeful rest.

This deep dive into the very latest understanding of our brains' biochemical basis offers a rich foundation for explaining retention, forgetting, and instructional design. I will share the findings of my secondary research in this area in a future blog.

Neural Plausibility

One of the reasons I am persuaded to adopt Predictive Processing/Active Inference is its growing neurobiological grounding, supported by computational psychology models. Neural evidence shows prediction error signals, hierarchical processing, and precision weighting at work in real cortical structures.

Walsh et al., in their 2020 paper “Evaluating the neurophysiological evidence for predictive processing as a model of perception”, review key research to date. They acknowledge that there is a growing base of supportive empirical evidence, but highlight some challenges in predicting and proving some of the unique features of predictive processing/active inference. Subsequent papers (Chao et al., 2022; Lesicko et al., 2022; Mikulasch et al., 2023) are starting to address their concerns and provide a more robust evidence base.

CLT, by contrast, lacks clear neural correlates. Constructs like cognitive load and working memory capacity remain metaphors, with very limited support from solid biological markers. This limits CLT's long-term scientific utility.

Evolution, Learning, and Primary vs Secondary Knowledge

CLT uses an evolutionary argument to distinguish between biologically primary and secondary knowledge. Predictive Processing/Active Inference offers a stronger evolutionary account.

Organisms that predicted threats or rewards had survival advantages. Brains evolved not to process information, but to minimise surprise and prediction error. Over time, this machinery generalised across all sensory and motor systems.

This system builds knowledge hierarchically, from experience, forming what CLT calls biologically primary knowledge. But it also supports learning abstract, secondary knowledge through experience, modelling, and inference.

Instructional design, then, becomes the science of triggering manageable prediction errors to update students’ generative models. Our current best practices — explicit instruction, careful sequencing, modelling, correction, guided practice — align well with how the brain naturally learns.

Conclusion

As educators, we have a responsibility to ground our work in the best available understanding of how the brain learns. Predictive Processing/Active Inference theory offers a more neurally plausible and coherent framework than Cognitive Load Theory.

This does not invalidate the many instructional strategies developed under CLT. It provides a better explanation for why they work. Rather than continuing to patch an outdated architecture, we can adopt a stronger foundation. In addition, Predictive Processing/Active Inference can act as a fundamental human cognitive architecture theory that can unify the disparate set of instructional theories we have in education today.

Sweller’s openness to refinement is commendable. However, perhaps the next scientific step is not another extension of CLT, but a reframing of its base. Predictive Processing/Active Inference theory may offer exactly that.

Closing Thought - Who am I to challenge the giants of EduCognitive Science?

Fair question. My journey into this field hasn’t followed a traditional academic route—it’s been deeply personal and driven by a fierce desire to support my family. Between my partner and me, we have four children, collectively with six dyslexia diagnoses, three ADHD diagnoses, and two autism diagnoses. That kind of reality forces you to engage deeply with how humans think, learn, and experience the world.

With a background in high-tech engineering, I eventually transitioned into a teaching career, landing in a special school for autistic students. From day one, I found myself drawn to cognitive science, not just as a teacher, but as a father and stepfather trying to understand and advocate for the neurodivergent people I care most about. You could say it’s become a special interest of mine.

My initial foray was through cognitive load theory, the simple memory model, and Paivio’s dual coding theory. These helped me frame teaching strategies, but I still found gaps, particularly in explaining the unique challenges and strengths of my autistic students. Then I discovered Autism and the Predictive Brain by Peter Vermeulen. His clear, compelling introduction to predictive processing theory opened a new door. Suddenly, the traits I observed in my students, and in my own children, made more sense. Predictive processing offered not just explanations, but insight into why certain strategies actually worked.

That book was a turning point. I couldn’t understand why such a compelling model, which seemed to align so well not only with observable neurodivergent experience but with learning science in general, was virtually absent from mainstream educational discourse. That was three years ago. Since then, I’ve been on a deep dive into predictive processing and active inference, motivated by both professional curiosity and personal necessity.

I dare to make this challenge because I’m a firm believer that scientific discourse, constructive, evidence-based, and open, is the engine that drives progress. I feel confident in my understanding and application of predictive processing and active inference, not only through years of study and classroom practice, but because I’ve had the privilege of reviewing my thinking with leaders in the field. Scholars like Karl Friston, Chris and Uta Frith, and Thomas Parr have engaged with my work, offering thoughtful feedback and ideas for development. I come to this not as an “edupersonality” with a brand to develop, nor as an established education researcher, but as a practitioner committed to building better, more inclusive practice, especially for students whose cognitive profiles challenge the traditional models of learning.

References:

Chao OY, Nikolaus S, Yang YM, Huston JP. Neuronal circuitry for recognition memory of object and place in rodent models. Neurosci Biobehav Rev. 2022 Oct;141:104855. doi: 10.1016/j.neubiorev.2022.104855. Epub 2022 Sep 8. PMID: 36089106; PMCID: PMC10542956.

George, D., et al. (2025). A detailed theory of thalamic and cortical microcircuits for predictive visual inference. Sci. Adv. 11, eadr6698. DOI: 10.1126/sciadv.adr6698

Friston, K., Rosch, R., Parr, T., Price, C., & Bowman, H. (2017). Deep temporal models and active inference. Neuroscience and Biobehavioral Reviews, 77, 388 - 402. https://doi.org/10.1016/j.neubiorev.2017.04.009.

Kirschner, P. (2025, June 20). When failure feeds the flame. Substack. https://substack.com/home/post/p-166380436

Lesicko, A. M. H., Angeloni, C. F., Blackwell, J. M., De Biasi, M., & Geffen, M. N. (2022). Corticofugal regulation of predictive coding. eLife, 11, e73289. https://doi.org/10.7554/eLife.73289

Mikulasch, F. A., Rudelt, L., Wibral, M., & Priesemann, V. (2023). Where is the error? Hierarchical predictive coding through dendritic error computation. Trends in Neurosciences, 46(1), 45–59. https://doi.org/10.1016/j.tins.2022.09.007

Sweller, J. The Development of Cognitive Load Theory: Replication Crises and Incorporation of Other Theories Can Lead to Theory Expansion. Educ Psychol Rev 35, 95 (2023). https://doi.org/10.1007/s10648-023-09817-2

Vezoli J, Magrou L, Goebel R, Wang XJ, Knoblauch K, Vinck M, Kennedy H. Cortical hierarchy, dual counterstream architecture and the importance of top-down generative networks. Neuroimage. 2021 Jan 15;225:117479. doi: 10.1016/j.neuroimage.2020.117479. Epub 2020 Oct 21. PMID: 33099005; PMCID: PMC8244994.

Walsh KS, McGovern DP, Clark A, O'Connell RG. Evaluating the neurophysiological evidence for predictive processing as a model of perception. Ann N Y Acad Sci. 2020 Mar;1464(1):242-268. doi: 10.1111/nyas.14321. Epub 2020 Mar 8. PMID: 32147856; PMCID: PMC7187369.

Main players / sources

Karl Friston, Prof. Neuroscience at UCL, is perhaps the most central figure in the development of predictive processing. Friston’s work on the “free energy principle” posits that biological systems, like the brain, strive to minimise the difference between predicted and actual sensory inputs. His theoretical contributions provide a unifying framework based on physics concepts, that has been widely influential in neuroscience and cognitive science.

Andy Clark, Prof. Cognitive Philosophy University of Sussex, has been a major influence in this field, broadening the scope into how many aspects of cognition and consciousness can be supported with the predictive processing model. He is also strongly developing the philosophical aspects of embedded cognition.

Anil Seth, Cognitive & Computational Neuroscience University of Sussex, has also been shaping the debate on predictive processing. His focus has been on understanding consciousness, and integrating neurology and imaging, through to how computational models can be developed to demonstrate and use the related theories.

Royal Faraday Lecture: – 26th March 2024

Chris Frith, Emeritus Professor of Neuropsychology University College London, has developed predictive processing in the area of social cognition and relationship with others, as well as using it to understand mental illness including schizophrenia.

Uta Frith, Emeritus Professor of Cognitive Development, University College London, has been key in exploring how predictive processing relates to social cognition, and in relation to autism and dyslexia.

Lisa Feldman Barrett, Prof. of Psychology, Northeastern University, has contributed by applying ideas of predictive processing in the area of emotional cognition.

Jakob Hohwy, Prof. of Philosophy, Monash University, has developed the field of predictive processing, providing thinking on how predictive processing can account for the phenomenological aspects of conscious experience, and has done much work on producing empirical testing and evidence to support the theories.

Peter Vermeulen, Educationalist, Autism in Context, has done an excellent job in interpreting predictive processing, and expanding on how it can be applied to understanding neurodivergent, particularly autistic, people.

Selected Research Papers on predictive processing

Clark A. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav Brain Sci. 2013 Jun;36(3):181-204. doi: 10.1017/S0140525X12000477. Epub 2013 May 10. PMID: 23663408.

Friston, K. The free-energy principle: a unified brain theory?. Nat Rev Neurosci 11, 127–138 (2010). https://doi.org/10.1038/nrn2787

Seth, A. K., & Hohwy, J. (2021). Predictive processing as an empirical theory for consciousness science. Cognitive Neuroscience, 12(2), 89–90. https://doi.org/10.1080/17588928.2020.1838467

Vezoli, J., Magrou, L., Goebel, R., Wang, X.J., Knoblauch, K., Vinck, M. and Kennedy, H., 2021. Cortical hierarchy, dual counterstream architecture and the importance of top-down generative networks. Neuroimage, 225, p.117479.

Adelson, Edward H. (2005). “Checkershadow Illusion”. Perceptual Science Group. MIT. Retrieved 2007-04-21.

PAPERS ON PREDICTIVE PROCESSING IN EDUCATION

Sanchez, S. (2021). Embodied Learning: Capitalizing on Predictive Processing. In: Arai, K. (eds) Intelligent Computing. Lecture Notes in Networks and Systems, vol 285. Springer, Cham. https://doi.org/10.1007/978-3-030-80129-8_34

Köster, M., Kayhan, E., Langeloh, M., & Hoehl, S. (2020). Making Sense of the World: Infant Learning From a Predictive Processing Perspective. Perspectives on Psychological Science, 15(3), 562-571. https://doi.org/10.1177/1745691619895071

May, C. J., Wittingslow, R., & Blandhol, M. (2022). Provoking thought: A predictive processing account of critical thinking and the effects of education. Educational Philosophy and Theory, 54(14), 2458–2468. https://doi.org/10.1080/00131857.2021.2006056
