Rethinking the Transient Information Effect: From Cognitive Load to Predictive Processing

Reframing the transient information effect using Predictive Processing / Active Inference theory clarifies why the effect happens, and why the strategies to minimise the effect work.

In a recent post on the Science of Learning Substack, “The Transient Information Effect: Why Great Explanations Don’t Always Stick”, Nidhi Sachdeva and Jim Hewitt interview John Sweller, who explains the phenomenon through the lens of Cognitive Load Theory (CLT).

This prompted me to reflect on why I think CLT — and, more broadly, the information-processing model of human cognition — is limited in how it accounts for this important effect. I believe that Predictive Processing / Active Inference theory, now the dominant framework in cognitive neuroscience, provides a richer and more accurate foundation for understanding what is really happening when transient information disrupts learning.

(For a primer on Predictive Processing / Active Inference and its applications to education, see my blog Teachers are Prediction Error Managers. For a discussion of why we should be moving from the information-processing model and CLT to a predictive mind paradigm, see From Load to Prediction.)

What’s happening when the Transient Information Effect occurs?

In the interview, Sweller was asked:

What is happening in working memory when the Transient Information Effect occurs?

Here I’d like to reframe that question:

What is happening according to Predictive Processing / Active Inference when the Transient Information Effect occurs?

From a Predictive Processing / Active Inference perspective, the Transient Information Effect is not about “working memory being overloaded.” It is about what happens when the brain tries to test and update its predictions, but the evidence it needs disappears too quickly.

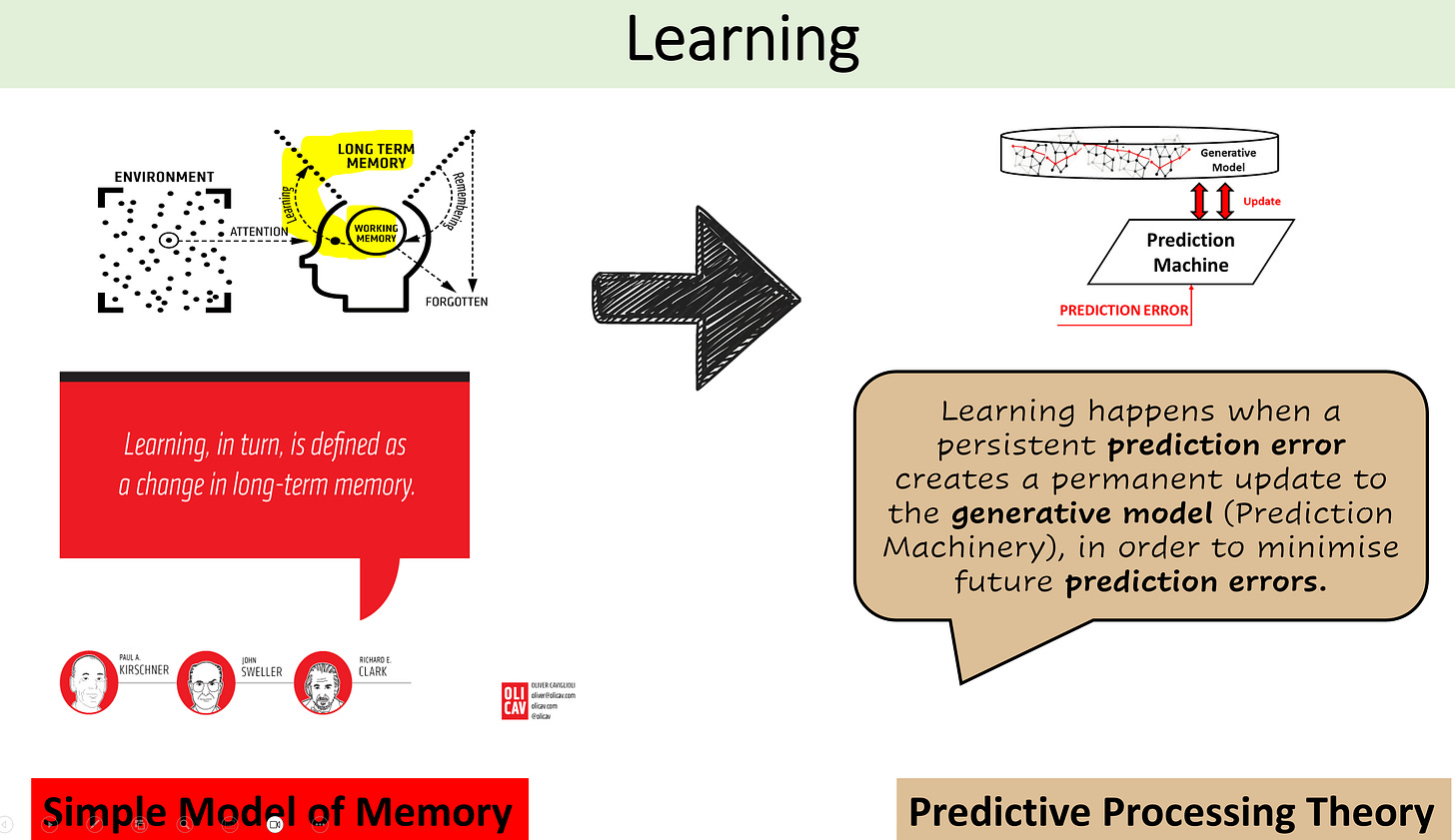

Learning as prediction and error correction

The brain constantly generates predictions about what it expects to hear or see, and checks them against incoming information. When a mismatch occurs, a prediction error is generated. This error drives the brain either to select a better-fitting prior, or to update its generative model of the world.

For genuine learning to occur, the brain must encounter a prediction error that it cannot resolve with its existing priors. At that point, it works to infer a better model — perhaps by re-weighting existing priors (similar to adjusting a schema), or by actively sampling the environment to find a better statistical fit.

A revised model is reinforced and embedded only if it has been reactivated successfully. When the same or similar situation arises, the updated model should then generate low prediction error, confirming the update was worthwhile.

The problem with transient input

When information is fleeting — a spoken sentence or a step in an animation — the sensory evidence disappears before the brain has fully used the prediction error to update its model. Unlike a diagram or a piece of text that stays visible, transient input cannot be re-sampled. The learner loses the chance to check and refine their predictions.

Unresolved prediction errors

Because the evidence has gone, the cycle of prediction → error → update is interrupted. Errors remain unresolved, leaving understanding fragile and incomplete.
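
To make the interrupted loop concrete, here is a toy sketch in Python. It is an illustration under stated assumptions, not a model of the brain: the belief is a single number, each look at the evidence nudges it by a fixed fraction of the prediction error (a simple delta rule, used here as a stand-in for full Active Inference), and the names `residual_error`, `learning_rate`, and `samples_available` are hypothetical.

```python
def residual_error(true_value, prior, learning_rate, samples_available):
    """Return the prediction error left over after a limited number of looks at the evidence.

    Assumption: each look moves the belief a fixed fraction of the way
    toward the evidence (a delta-rule update).
    """
    belief = prior
    for _ in range(samples_available):
        prediction_error = true_value - belief      # mismatch between evidence and belief
        belief += learning_rate * prediction_error  # partial update of the model
    return abs(true_value - belief)


# Transient input: the evidence is gone after a single look.
transient = residual_error(true_value=10.0, prior=0.0, learning_rate=0.3, samples_available=1)

# Permanent input: the learner can re-sample the same evidence several times.
permanent = residual_error(true_value=10.0, prior=0.0, learning_rate=0.3, samples_available=8)

print(round(transient, 2))  # 7.0
print(round(permanent, 2))  # 0.58
```

With one look, most of the error is still unresolved; with eight looks at the same evidence, it has largely gone. The numbers themselves are arbitrary; only the contrast matters.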

Why permanence matters

Permanent resources — diagrams, written steps, recordings — allow learners to return, re-check, and re-sample information. Each revisit offers another opportunity to resolve prediction errors. This is why strategies such as pausing, revisiting, or giving learners control over pacing are so effective: they enable the complete prediction–error resolution loop that transient information otherwise disrupts.

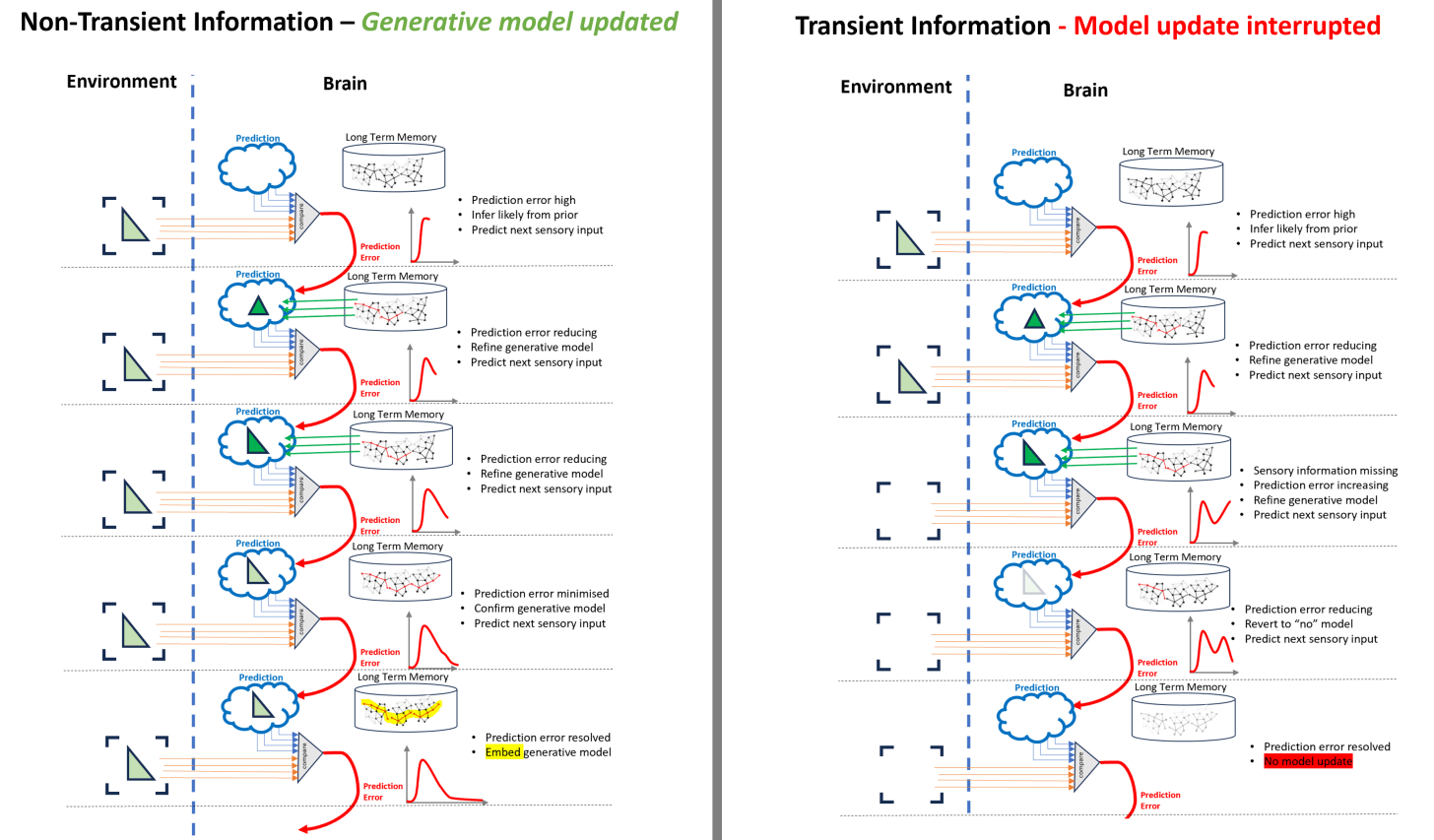

The following diagram illustrates what is happening with transient and non-transient information flow. It’s a challenging thing to illustrate!

Prior knowledge and expertise reversal

CLT researchers such as Sweller have consistently found that prior knowledge strongly moderates effects like this one, as in the so-called expertise reversal effect. Learners with strong prior knowledge can handle complexity that overwhelms novices.

From a Predictive Processing / Active Inference perspective, this is straightforward. If a learner’s generative model can already predict the incoming sensory input, there is little or no prediction error to resolve — so the transient effect does not appear. Conversely, the concept of desirable difficulty is also easy to explain: for a generative model to update, there must be prediction error. Stronger errors produce stronger learning signals, but the brain also has limits on the bandwidth of prediction errors and needs time to integrate updates.

Why Teacher Strategies Work

The key to overcoming the Transient Information Effect is to ensure that students can keep sampling evidence long enough to resolve their prediction errors. Common classroom strategies achieve this in different ways:

a) Visual permanence

Diagrams, written steps, or keywords remain in view.

Students can re-sample evidence repeatedly, testing predictions until errors are resolved.

Each revisit stabilises their internal model.

While some re-sampling can occur “on the fly,” pauses allow sensory revisiting, so that generative models can be confirmed and tagged for embedding.

b) Teacher repeats

Rephrasing or restating “re-releases” evidence into the environment.

Students gain extra prediction–error cycles, especially valuable when priors are weak.

Repetition helps support model updating that would otherwise be cut off.

c) Chunking

Segmenting information reduces the number of prediction errors arriving at once.

Each chunk can be processed and stabilised before moving on.

This keeps inference within a manageable zone, preventing overload.

d) Pausing for integration

Pauses provide time for re-sampling, mental replay, and checking understanding.

By slowing the arrival of new errors, pauses give the model time to settle.

This creates conditions for deeper integration.

e) Student rehearsal

Rehearsal forces a re-prediction: the student must generate the content again from their own model.

Accuracy feedback is essential:

In choral response, students instantly hear whether their prediction aligns with peers, and teachers hear discordances to address immediately.

In cold calling, using the “everyone thinks” approach (question–pause–name) ensures all students compare their predictions with the correct answer.

In turn-and-talk, scaffolding the dialogue, sampling responses, and highlighting misconceptions ensures precision.

In written rehearsal, students may assume success simply by producing an answer; checks for understanding and whole-class feedback are vital to reinforce only correct predictions.

The I Do / We Do / You Do model works in the same way:

I Do: The teacher models the correct prediction, reducing uncertainty.

We Do: Students attempt a shared re-prediction, immediately comparing with teacher and peer feedback.

You Do: Students generate predictions independently, with precision checked through feedback.

In Predictive Processing / Active Inference terms: the higher the precision (confidence in the correctness of the signal), the stronger the update. Without feedback, learners risk strengthening the wrong priors.
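
One way to picture the role of precision is the sketch below. It assumes the textbook precision-weighted update for a single Gaussian belief, which is a simplification chosen for illustration rather than something taken from the interview or from any particular Active Inference model; the function name and the numbers are hypothetical.

```python
def precision_weighted_update(belief, belief_precision, evidence, evidence_precision):
    """Move a belief toward the evidence in proportion to how much the evidence is trusted.

    Assumption: belief and evidence are both Gaussian, so the update gain is
    evidence_precision / (evidence_precision + belief_precision).
    """
    gain = evidence_precision / (evidence_precision + belief_precision)
    return belief + gain * (evidence - belief)


# Rehearsal with clear feedback: the signal is trusted, so the update is large.
with_feedback = precision_weighted_update(
    belief=0.0, belief_precision=1.0, evidence=10.0, evidence_precision=4.0
)

# Rehearsal without feedback: the signal is uncertain, so the update is small.
without_feedback = precision_weighted_update(
    belief=0.0, belief_precision=1.0, evidence=10.0, evidence_precision=0.25
)

print(round(with_feedback, 2))     # 8.0
print(round(without_feedback, 2))  # 2.0
```

The same rule cuts both ways: if the trusted signal is itself wrong, for example an unchecked answer the student feels sure about, the large update goes in the wrong direction, which is the risk of strengthening the wrong priors.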

f) Student note-making

Notes transform transient input into a permanent, student-owned artefact.

The value depends on the precision of the notes. Poorly structured or inaccurate notes risk reinforcing error.

Teacher modelling of note structures, exemplars, or guided scaffolds helps ensure that student notes provide reliable evidence for later re-sampling.

g) Student-controlled pacing and reference materials

Providing knowledge organisers, guided notes, textbooks, or recorded videos allows students to pause, rewind, and revisit at their own pace.

This extends permanence beyond the live classroom and ensures prediction errors can be resolved even after the lesson.

Crucially, it hands control of sampling policies to the learner: they decide what to re-check, how often, and in what order.

From a Predictive Processing / Active Inference perspective, this restores the full prediction–error cycle that transient classroom input can cut off. The learner isn’t locked into the teacher’s pace; they can manage their own flow of prediction errors and updates.

In summary

From a Predictive Processing / Active Inference perspective, the Transient Information Effect is not about memory limits but about interrupted inference loops. Fleeting information vanishes before prediction errors can be resolved, leaving learners unable to update their generative models.

Teaching strategies that provide permanence, allow revisiting, and ensure precise feedback work because they give students the chance to complete the cycle of prediction, error, and update. In this way, Predictive Processing / Active Inference offers not only a more neurally plausible explanation than CLT, but also a clearer rationale for why specific teaching practices are effective in the classroom.

We see it in ourselves in the way we interact with both written and spoken word, of course. We're constant prognosticators. We fill in the blanks. We also reinvent wheels with stunning regularity.

Thinking in terms of predictive processing seems to support more classical forms of "I do, we do, you do", such as a math teacher using a well-wrought, clear problem-solution set to explicitly and visually lead a long set of practice problems with a very high degree of similarity and explicit steps. Working one problem and assigning an exit ticket is not sufficient. Ten, or thirty, would be better.

Old-school copywork is another method that provides immediate feedback and high-quality course correction considered relative to the wider language aspects of predictive processing. As it stands, we tend to ask for context to be applied by students where there is little or none, relatively speaking. We ask them to fill in the blanks out of thin air. Ex nihilo nihil fit, however.

This article provides much food for thought. Thank you.

It could be that my prior learning means that this doesn’t sit comfortably with me, as after two or three reads I don’t feel I have enough prior learning to judge the full efficacy of this proposition. I appreciate the intellect within the writing, if not the “this is better than that” premise, which feels unnecessarily reductive. As a teacher, I read here of abstracts and concepts but not of practice, classroom commitment to learning, and the development of connections. Where is the example that might help this embed itself in memory, giving a road to follow to deploy predictive questioning? I see stacked-up techniques but no road to drive down. Teachers love to share. Desire to inspire. But this abstraction as to which theory is better? Is that our Hippocratic Oath? Where’s the learning for a classroom teacher there? Where’s the example of this happening inside a classroom in a way that’s graspable for understanding, for practical application? So I worry that this is not talking to teachers but at them. Not talking with teachers but for them. Tell me why this matters for those of us walking through the door into classrooms next week. To breathe and grow, learning needs challenge. Happy to know more.